Most songs don’t begin with instruments. They begin with language: a line scribbled in a notebook, a sentence typed in Notes, a half-formed thought about a feeling you can’t quite name yet. For a long time, the gap between words and sound was wide, and often discouraging. That’s why workflows built around Lyrics to Song feel meaningful: they acknowledge something I’ve repeatedly observed in my own experiments, which is that many creators think in words first, not chords.

What changed my perspective wasn’t the novelty of AI-generated music, but the realization that text can act as a genuine starting interface for composition. Instead of forcing ideas into a technical framework too early, the process lets you stay in the emotional and narrative space longer, and only later decide what deserves refinement.

The Friction Nobody Talks About: Translation, Not Talent

Creative block is often misdiagnosed. It’s rarely about lacking ideas. More often, it’s about translation:

- You know the emotion, but not the harmony.

- You have lyrics, but can’t hear how they should breathe.

- You sense a chorus, but don’t know how to arrive there musically.

Traditional tools assume you already speak “music production.” That’s powerful, but exclusionary. Many ideas die simply because the cost of translating them into sound is too high.

A Shift in Perspective: Music as Interpretation, Not Construction

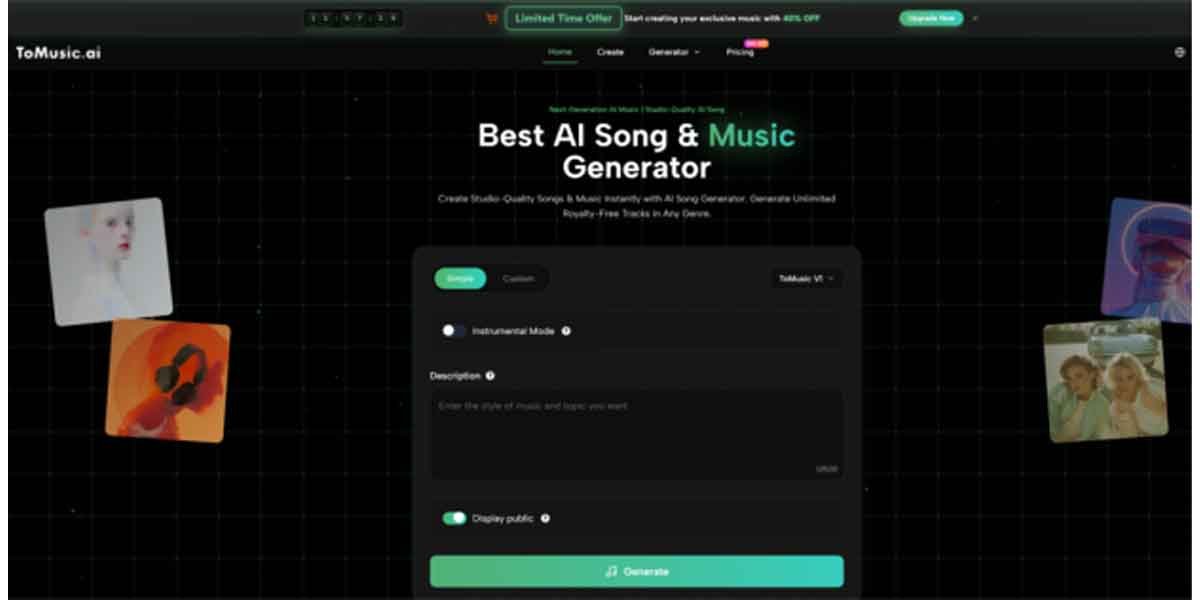

Instead of thinking “I must build a song,” ToMusic.ai encourages a different mindset: *interpretation*.

You describe intent.

The system interprets it musically.

You respond as a listener, not a technician.

Later, if you want, you can switch roles and become the producer again. But the early stage becomes conversational rather than mechanical.

In this sense, the platform behaves less like a studio and more like a collaborator that speaks a different language, one fluent in probability, patterns, and stylistic memory.

What Actually Happens Under the Hood (From a User’s Point of View)

From the outside, the process feels simple. Underneath, it’s layered.

1. You provide narrative signals

These signals aren’t just “genre tags.” They include:

- emotional descriptors

- pacing hints

- story context

- lyrical density

In my own testing, prompts that described movement (“quiet opening that slowly expands”) produced more coherent results than static descriptions (“sad pop song”).

2. The system makes compositional decisions

Without exposing technical complexity, the AI decides:

- melodic direction

- rhythmic structure

- harmonic progression

- arrangement density

This is where an AI Music Generator becomes more than a novelty. It doesn’t just output sound; it outputs a proposal for how your idea could exist as music.

3. You evaluate, not execute

Instead of asking “how do I build this,” you ask:

- Does this feel right?

- Is the emotion landing?

- Is the chorus earned?

- Does the pacing suit the imagined use case?

That shift alone can save hours.

Why This Workflow Changes Decision-Making

One subtle but important effect I noticed: when iteration is cheap, judgment becomes sharper.

When building a track manually, you tend to defend sunk costs. With AI-assisted drafts, you don’t. If version one doesn’t work, you move on without guilt. That freedom encourages experimentation and, paradoxically, better taste.

Comparing Creation Models: Language-First vs Tool-First

| Dimension | Language-first workflow (ToMusic.ai) | Tool-first workflow (Traditional DAW) |
| --- | --- | --- |
| Entry point | Words, lyrics, mood, scene | Software setup, instruments, plugins |
| Time to first full draft | Minutes (in my experience) | Often hours |
| Creative mindset | Interpret and react | Build and refine |
| Best stage of use | Ideation, early exploration | Final production and polish |
| Emotional feedback | Immediate and holistic | Gradual and technical |
| Risk | Output variability | Over-investment before clarity |

Neither approach replaces the other. They simply operate at different moments in the creative cycle. ToMusic.ai shortens the distance between “idea” and “audible reality.”

Personal Observations From Repeated Use

After running dozens of prompts across styles, a few patterns became clear:

1. Emotional clarity matters more than technical detail

Prompts that focused on feeling progression consistently outperformed prompts overloaded with genre labels.

2. Lyrics expose weaknesses quickly

When lyrics are involved, you immediately hear:

- awkward phrasing

- overcrowded syllables

- lines that look good on paper but don’t sing well

This feedback loop is valuable, even if you don’t keep the generated version.

3. Higher-quality outputs still require human judgment

Some generations sounded surprisingly complete. Others missed the mark. The difference wasn’t effort; it was alignment. The system doesn’t replace your taste; it reveals it.

Limitations Worth Acknowledging

To keep expectations realistic, a few constraints stood out:

- Results vary with prompt precision

Vague input leads to generic output. This isn’t a flaw so much as a mirror.

- Multiple generations are often necessary

In my experience, the strongest result was rarely the first. Iteration is part of the process.

- Vocals can be expressive but inconsistent

Certain lyrical rhythms or dense lines can challenge natural delivery. Minor lyric edits often fix this.

- Consistency requires structure

If you’re creating multiple tracks for the same project, reusable prompt templates help maintain identity.

Acknowledging these limits actually increases trust in the tool, because it frames it as a system to work *with*, not a magic button.

Prompt Strategies That Felt More Predictable

Narrative-first

Describe a scene, not a genre.

Example direction: “A quiet night drive that gradually turns hopeful as the city lights open up.”

Structure-aware

Tell the system how sections should behave.

Example direction: “Minimal verses, wider chorus, space after each chorus.”

Lyrics with intention

Provide lyrics, then explain how they should feel emotionally, not just musically.

These approaches consistently produced outputs that felt intentional rather than accidental.
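One way to combine these strategies with the earlier advice about reusable prompt templates is to keep the parts that define a project’s identity fixed and vary only the scene, emotional arc, and lyrics. The sketch below is a minimal, hypothetical illustration: `PromptTemplate`, its fields, and `render` are names invented here, not part of any ToMusic.ai API, and the output is simply plain text you would paste into the tool yourself. The style string is an illustrative placeholder, not a recommendation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PromptTemplate:
    """Hypothetical reusable prompt template (not a ToMusic.ai API).

    The fields stay fixed across every track in a project, which is
    the part that carries the project's identity.
    """
    style_identity: str  # shared sound for the whole project (illustrative)
    structure: str       # how sections should behave ("structure-aware")

    def render(self, scene: str, feeling: str, lyrics: str = "") -> str:
        """Build a plain-text prompt: narrative first, then structure and style."""
        parts = [
            f"Scene: {scene}",            # "narrative-first": describe a scene
            f"Emotional arc: {feeling}",  # how the track should feel over time
            f"Structure: {self.structure}",
            f"Style: {self.style_identity}",
        ]
        # "Lyrics with intention": lyrics are appended only when provided.
        if lyrics.strip():
            parts.append("Lyrics:\n" + lyrics.strip())
        return "\n".join(parts)


# Usage: one template per project, one render call per track.
project = PromptTemplate(
    style_identity="warm synths, soft vocal, mid-tempo",  # placeholder values
    structure="Minimal verses, wider chorus, space after each chorus.",
)
prompt = project.render(
    scene="A quiet night drive that gradually turns hopeful as the city lights open up.",
    feeling="restless at first, settling into quiet optimism",
)
print(prompt)
```

Keeping `style_identity` and `structure` constant while changing only the per-track inputs is one plausible way to apply the consistency advice; whether it actually keeps generations sounding related will still depend on the tool and may take several attempts.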

Who Benefits Most From This Approach

From what I’ve seen, this workflow resonates most with:

- writers who want to hear their words as songs

- creators producing music for video or digital content

- teams needing fast musical directions rather than final masters

- producers looking for ideation sparks outside their habits

It’s especially useful when momentum matters more than perfection.

A Different Kind of Conclusion

ToMusic.ai doesn’t make creativity effortless. It makes it *movable*. Ideas that once stayed abstract become audible. And once something is audible, you can judge it, shape it, or let it go.

That may be the real value here: not automation, but acceleration of understanding. When music begins as language, and language becomes sound quickly, creation stops feeling like a barrier and starts feeling like a conversation.